Speech interfaces: UI revolution or intelligent evolution?

Speech interfaces have received a lot of attention recently, especially with the marketing blitz for Siri, the new speech interface for the iPhone.

After watching some of the TV commercials you might conclude that you can simply talk to your phone as if it were your friend, and it will figure out what you want. For example, in one scenario the actor asks the phone, “Do I need a raincoat?”, and the phone responds with weather information.

A colleague commented that if he wanted weather information he would just ask for it, as in “What is the weather going to be like in Seattle?” or “Is it going to rain in Seattle?”

Without more conversational context, if a friend were to ask me, “Do I need a raincoat?”, I would probably respond, “I don’t know, do you?” — jokingly, of course.

Evo or revo?

Are we ready to converse with our phones and cars?

Possibly. But I think it will be more of a UI evolution than a UI revolution. In other words, speech interfaces will play a bigger role in UI designs, but that doesn't mean you're about to start talking to your phone — or any other device — as if it’s your best friend.

Currently, speech interfaces are underutilized. The reasons for this aren't yet clear, though they seem to encompass both technical and user issues. Traditionally, speech recognition accuracy rates have been less than perfect. Poor user interface design (for instance, reprompting strategies) has contributed to the overall problem and to increased user frustration.

Also, people simply aren't used to speech interfaces. For example, many phones support voice-dialing, yet most people don't use this feature. And user interface designers seem reluctant to leverage speech interfaces, possibly because of the additional cost and complexity, lack of awareness, or some other reason.

As a further complication, relying heavily on speech as an interface can lead to a suboptimal user experience. Speech interfaces pose some real challenges, including recognition accuracy rates, natural language understanding, error recovery dialogs, UI design, and testing. They aren't the flawless wonders that some marketers would lead you to believe.

Still, I believe there is a happy medium for leveraging speech interfaces as part of a multi-modal interface — one that uses speech as an interface where it makes sense. Some tasks are better suited for a speech interface, while others are not. For example, speech provides an ideal way to provide input to an application when you can capitalize on information stored in the user’s head. But it’s much less successful when dealing with large lists of unfamiliar items.

Talkin' to your ride

Other factors, besides Apple, are driving the growing role of speech interfaces — particularly in automotive. Speech interfaces can, for example, help address the issue of driver distraction. They allow drivers to keep their “eyes on the road and hands on the wheel,” to quote an oft-used phrase.

So, will we see a paradigm shift towards speech interfaces? It's unlikely. I'm hoping, though, that we'll see a UI evolution that makes better use of them.

Think of it more as a paradigm nudge than a paradigm shift.

Recommended reading

Situation Awareness: a Holistic Approach to the Driver Distraction Problem

Wideband Speech Communications for Automotive: the Good, the Bad, and the Ugly

Review: Drop Stop Car Wedge

Drop Stop Car Wedge is a simple product that does one thing very well. It blocks the gap between your car seat and the center console, so that you can't drop stuff down into that hard-to-reach spot.

I have been using one, and it works great. I do notice that the right side of my seat's bottom cushion is a little bit firmer due to compression, but it doesn't bother me.

The wedge is a tube made of black neoprene, with a pass-through for the seatbelt latch. It is stuffed with filler. To install it, you slip it over your seatbelt latch and then stuff it down, working it forward and back to smooth it out.

At $20/pair plus shipping, it isn't cheap, but isn't outrageously expensive either. It should outlast your vehicle.

Pros:

- Simple, effective

- Unobtrusive

- Seems durable

Cons:

- Unknown country of origin (not on packaging)

- $20+shipping is a little steep for what is basically a stuffed fabric tube

- Limited distribution (Can't buy it on Amazon, etc.)

- Adds firmness to right seat cushion side

*Note: DropStop did not provide any payment for this review, other than sending me a test unit.

Enabling the next generation of cool

Capturing QNX’s presence in automotive can’t be done, IMHO, without a nod to our experience in other markets. Take, for example, the extreme reliability required for the International Space Station and the Space Shuttle. This is the selfsame reliability that automakers rely on when building digital instrument clusters that cannot fail. Same goes for the impressive graphics on the BlackBerry PlayBook. As a result, Tier1s and OEMs can now bring consumer-level functionality into the vehicle.

Multicore is another example. The automotive market is just starting to take note while QNX has been enabling multi-processing for more than 25 years.

So I figure that keeping our hand in other industries means we actually have more to offer than other vendors who specialize.

I tried to capture this in a short video. It had to be done overnight so it’s a bit of a throw-away but (of course) I'd like to think it works. :-)

QNX and Freescale talk future of in-car infotainment

Paul Leroux

If you've read any of my blog posts on the QNX concept car (see here, here, and here), you've seen an example of how mixing QNX and Freescale technologies can yield some very cool results.

So it's no surprise that when Jennifer Hesse of Embedded Computing Design wanted to publish an article on the challenges of in-car infotainment, she approached both companies. The resulting interview, which features Andy Gryc of QNX and Paul Sykes of Freescale, runs the gamut — from mobile-device integration and multicore processors to graphical user interfaces and upgradeable architectures. You can read it here.

When will I get apps in my car?

I read the other day that Samsung’s TV application store has surpassed 10 million app downloads. That got me thinking: When will the 10 millionth app download occur in the auto industry as a whole? (Let’s not even consider 10 million apps for a single automaker.)

There’s been much talk about the car as the fourth screen in a person’s connected life, behind the TV, computer, and smartphone. The car rates so high because of the large amount of time people spend in it. While driving to work, you may want to listen to your personal flavor of news, listen to critical email through a safe, text-to-speech email reader, or get up to speed on your daily schedule. When returning home, you likely want to unwind by tapping into your favorite online music service. Given the current norm of using apps to access online content (even if the apps are a thin disguise for a web browser), this raises the question: when can I get apps in my car?

A few automotive examples exist today, such as GM MyLink, Ford Sync, and Toyota Entune. But app deployment to vehicles is still in its infancy. What conditions, then, must exist for apps to flourish in cars? A few stand out:

Cars need to be upgradeable to accept new applications — This is a no-brainer. However, recognizing that the lifespan of a car is 10+ years, it would seem that a thin client application strategy is appropriate.

Established rules and best practices to reduce driver distraction — These must be made available to, and understood by, the development community. Remember that people drive cars at high speeds and cannot fiddle with unintuitive, hard-to-manipulate controls. Apps that consumers can use while driving will become the most popular. Apps that can be used only when the car is stopped will hold little appeal.

A large, unfragmented platform to attract a development community — Developers are more willing to create apps for a platform when they don't have to create multiple variants. That's why Apple maintains a consistent development environment and Google/Android tries to prevent fragmentation. Problem is, fragmentation could occur almost overnight in the automotive industry — imagine 10 different automakers with 10 different brands, each wanting a branded experience. To combat this, a common set of technologies for connected automotive application development (think web technologies) is essential. Current efforts to bring applications into cars all rely on proprietary SDKs, ensuring fragmentation.

Other barriers undoubtedly exist, but these are the most obvious.

By the way, don’t ask me for my prediction of when the 10 millionth app will ship in auto. There’s lots of work to be done first.

Entune takes a hands-free approach to accessing apps.

SOLD - AE 86 - Levin Half Cut

SOLD To Local Customer

This Half Cut AE 86 - Engine 4A-GE - Manual, Selling Together With One Set Aftermarket Rims (Front And Rear Different Design), Long Shaft, Rear Axle, One Set Tail-Lamps And Spoiler - Remark: Speedometer - Missing

Selling As Is Where Is Basis

To View Engine Revving, Click Video Below :-

General View Of The Half Cut

Engine Tag Information

Chassis Number

Front View Of The Engine Bay

Side View Of The Engine Bay

Side View Of The Engine Bay

Inner Part Of The Engine

General View Of The Undercarriage

Side View Of The Gear Box

Side View Of The Gear Box

Rear View Of The Manual Gear Box

Driver Side - Head Lamp And Side Lamp - Good Condition

Driver Side - Fender And Bonnet - Red Circle Indicates Dented Area

Bonnet - Close View Of The Dented Area

Driver Side - Fender - Close View Of The Top Section - Dented

Passenger Side - Head Lamp - Good Condition

Passenger Side - Side Lamp - Cracked

Passenger Side - Red Circle Indicates Dented Area

Passenger Side - Fender - Close View Of The Top Section - Dented

Speedometer - Missing

Rear Spoiler And Tail Lamps

General View Of The Rims

General View Of The Front Rims With Tires

Front Rim - Brand - BRIDGESTONE

Front Tire Size :- 175/70 R 13

General View Of The Rear Rims With Tires

Rear Rim - Brand - ADVAN

Rear Tire Size :- 175/65 R 14

Rear Axle With Brake Cables

Long Shaft / Rear Absorbers With Spring

General View Of The Half Cut

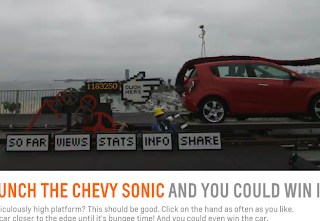

Sonic Drop Breaks Down?

Chevy is doing a stunt to promote the new Sonic b-car. They are dropping it, attached to a bungee cord, off a huge tower, and using web clicks to move it towards the edge of the leap.

Looks like it broke, though.

I hope those guys are careful.

Marking over 5 years of putting HTML in production cars

Think back to when you realized the Internet was reaching beyond the desktop. Or better yet, when you realized it would touch every facet of your life. If you haven’t had that second revelation yet, perhaps you should read my post about the Twittering toilet.

For me, the realization occurred 11 years ago, when I signed up with QNX Software Systems. QNX was already connecting devices to the web, using technology that was light years ahead of anything else on the market. For instance, in the late 90s, QNX engineers created the “QNX 1.44M Floppy,” a self-booting promotional diskette that showcased how the QNX OS could deliver a complete web experience in a tiny footprint. It was an enormous hit, with more than 1 million downloads.

Embedding the web, dot com style: The QNX-powered Audrey

At the time, Don Fotsch, one of Audrey’s creators, coined the term “Internet Snacking” to describe the device’s browsing environment. The dot com crash in 2001 cut Audrey’s life short, but QNX maintained its focus on enabling a rich Internet experience in embedded devices, particularly those within the car.

The point of these stories is simple: Embedding the web is part of the QNX DNA. At one point, we even had multiple browser engines in production vehicles, including the Access Netfront engine, the QNX Voyager engine, and the OpenWave WAP Browser. In fact, we have had cars on the road with Web technologies since model year 2006.

With that pedigree in enabling HTML in automotive, we continue to push the envelope. We already enable unlimited web access with full browsers in BMW and other vehicles, but HTML in automotive is changing from a pure browsing experience to a full user experience encompassing applications and HMIs. With HTML5, this experience extends even to speech recognition, AV entertainment, rich animations, and full application environments — Angry Birds anyone?

People often now talk about “App Snacking,” but in the next phase of HTML5 in the car, it will be “What’s for dinner?”

BBDevCon — Apps on BlackBerry couldn't be better

Unfortunately I joined the BBDevCon live broadcast a little too late to capture some of the absolutely amazing TAT Cascades video. RIM announced that TAT will be fully supported as a new HMI framework on BBX (yes, the new name of QNX OS for PlayBook and phones has been officially announced now). The video was mesmerizing — a picture album with slightly folded pictures falling down in an array, shaded and lit, with tags flying in from the side. It looked absolutely amazing, and it was created with simple code that configured the TAT framework "list" class with some standard properties. And there was another very cool TAT demo that showed an email filter with an active touch mesh, letting you filter your email in a very visual way. Super cool looking.

HTML5 support is huge, too — RIM has had WebWorks and Torch for a while, but their importance continues to grow. HTML5 apps provide the way to unify older BB devices and any of the new BBX-based PlayBooks and phones. That's a beautiful tie-in to automotive, where we're building our next generation QNX CAR software using HTML5. The same apps running on desktops, phones, tablets, and cars? And on every mobile device, not just one flavor like iOS or Android? Sounds like the winning technology to me.

Finally, they talked about the success of App World. There were some really nice facts to contrast with the negative press RIM has received on "apps". First some interesting comparisons: 1% of Apple developers made more than $1000, but 13% of BlackBerry developers made more than $100,000. Whoa. And App World generates the second-largest amount of money, more than Android. Also very interesting!

I can't do better than the presenters, so I'll finish up with some pics for the rest of the stats...
